40% Reduction in Idle GPU Time

Real-time visibility and optimization lowered GPU underutilization across environments.

Global FSI Customer

60% Faster Root-Cause Diagnosis

AIFO cut MTTR in half by tracing AI performance issues to infrastructure bottlenecks.

Healthcare Provider

15% Lower Power Usage

Energy analytics revealed throttled GPUs, enabling targeted optimization and cost savings.

AI Lab – USA

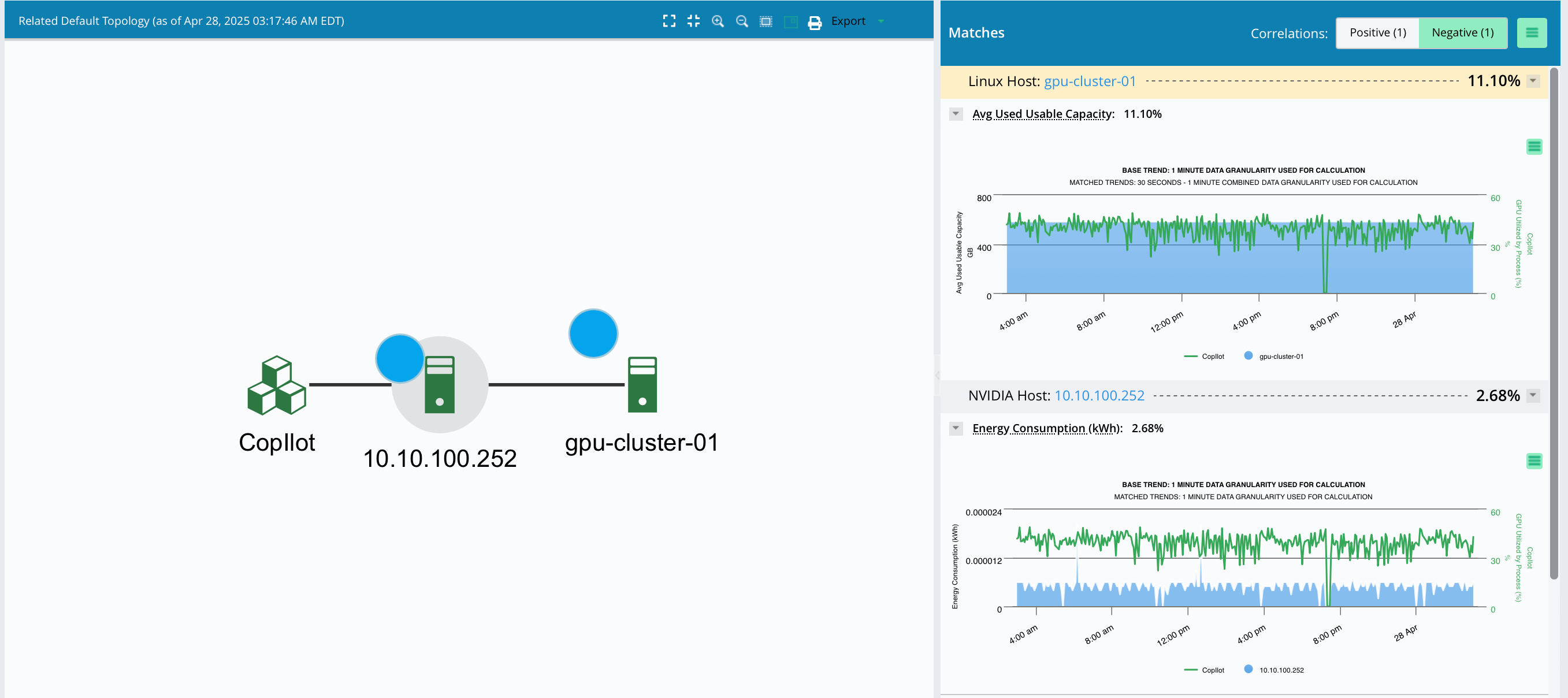

Correlate Infrastructure with AI Performance

- Map AI jobs to the exact GPU, host, network, and storage resources they use.

- See every step in the AI pipeline, from data ingress to model inference.

- Integrate Virtana Container Observability, Infrastructure Observability, and third-party monitoring tools for top-down visibility across layers.

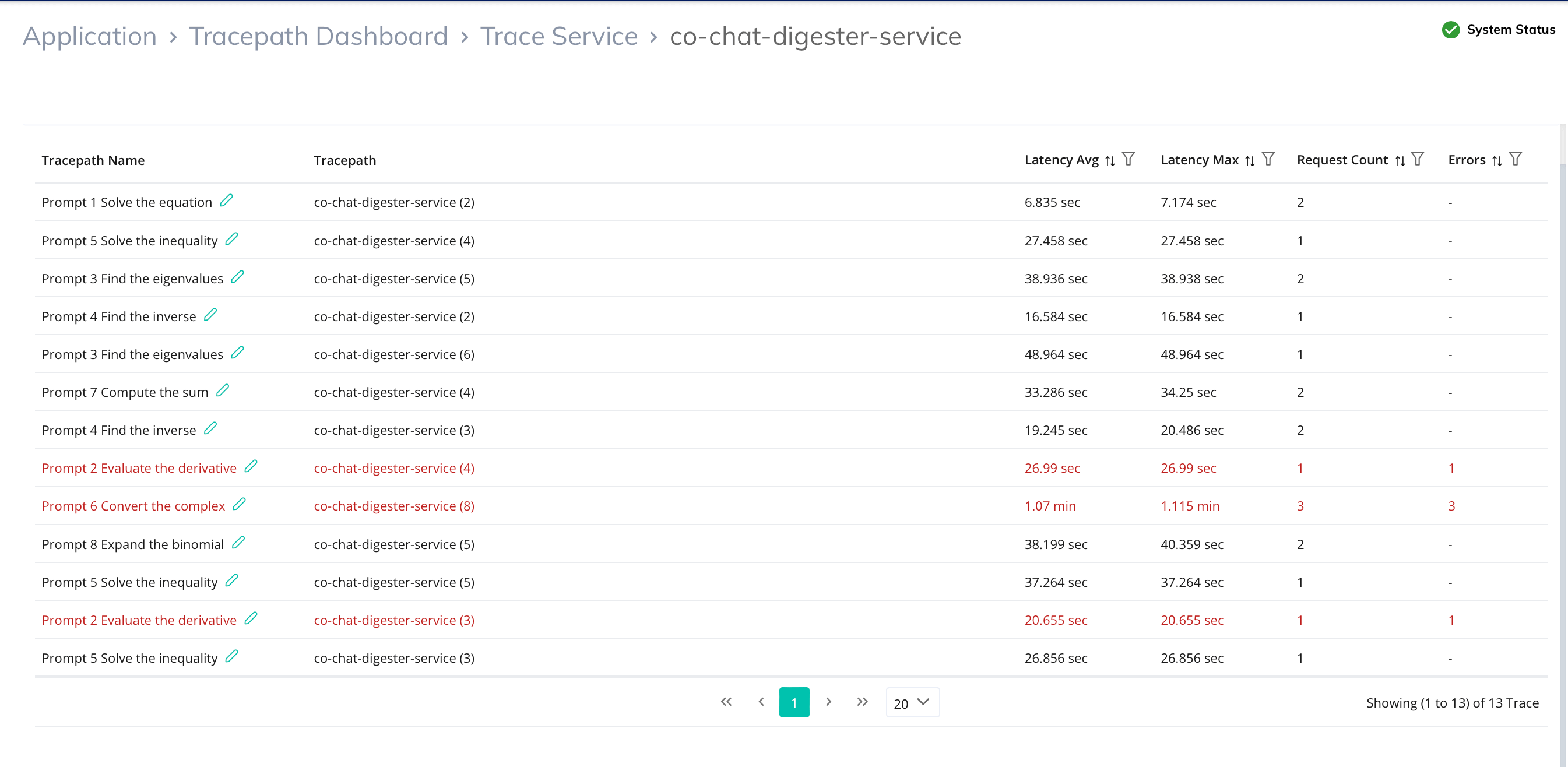

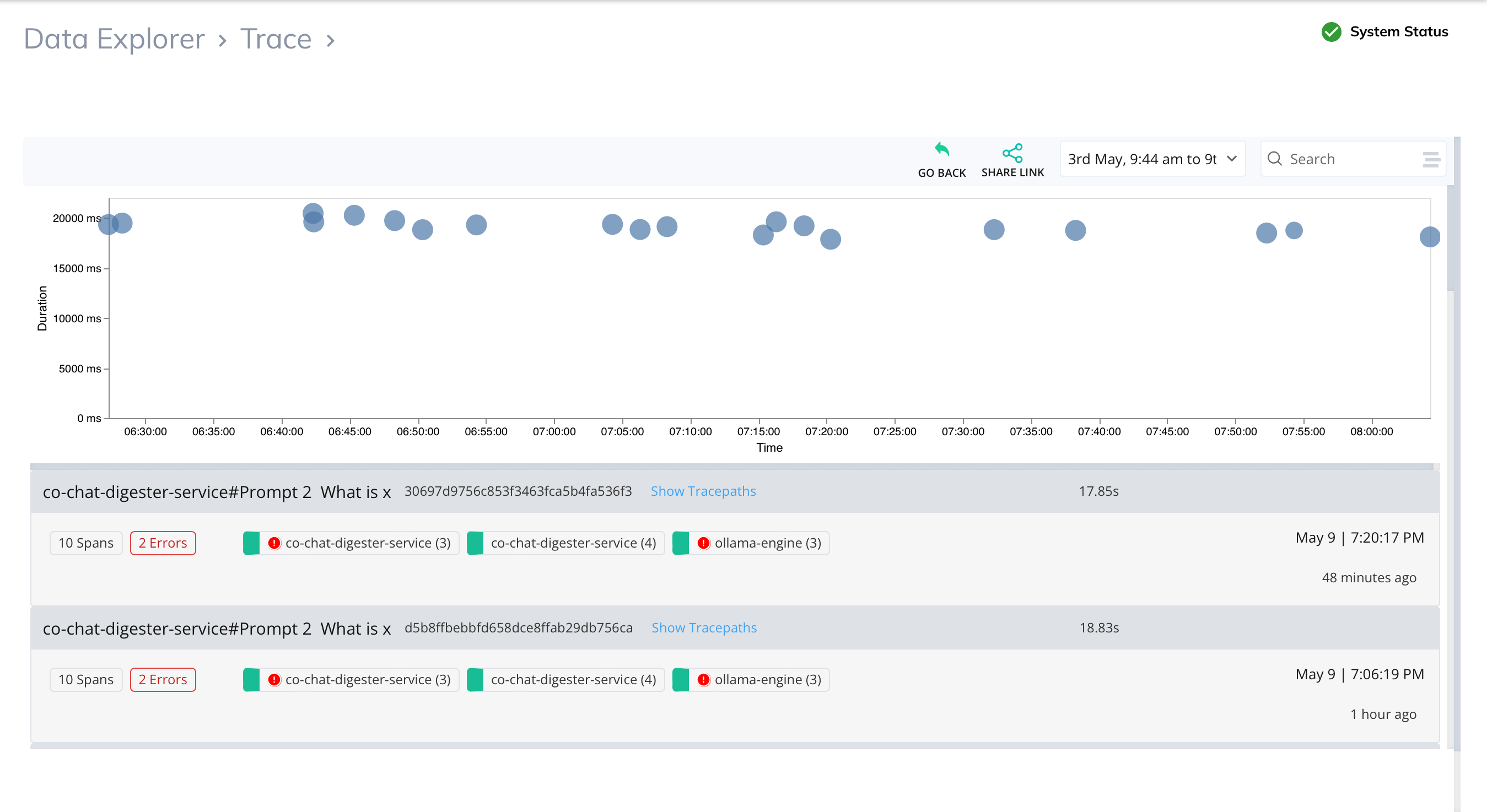

Accelerate RCA for AI Training Failures

- Reduce MTTR by pinpointing the exact cause of training job failures.

- Surface hardware issues like port resets, GPU throttling, or switch outages.

- Save days of debugging time and avoid repeated training runs.

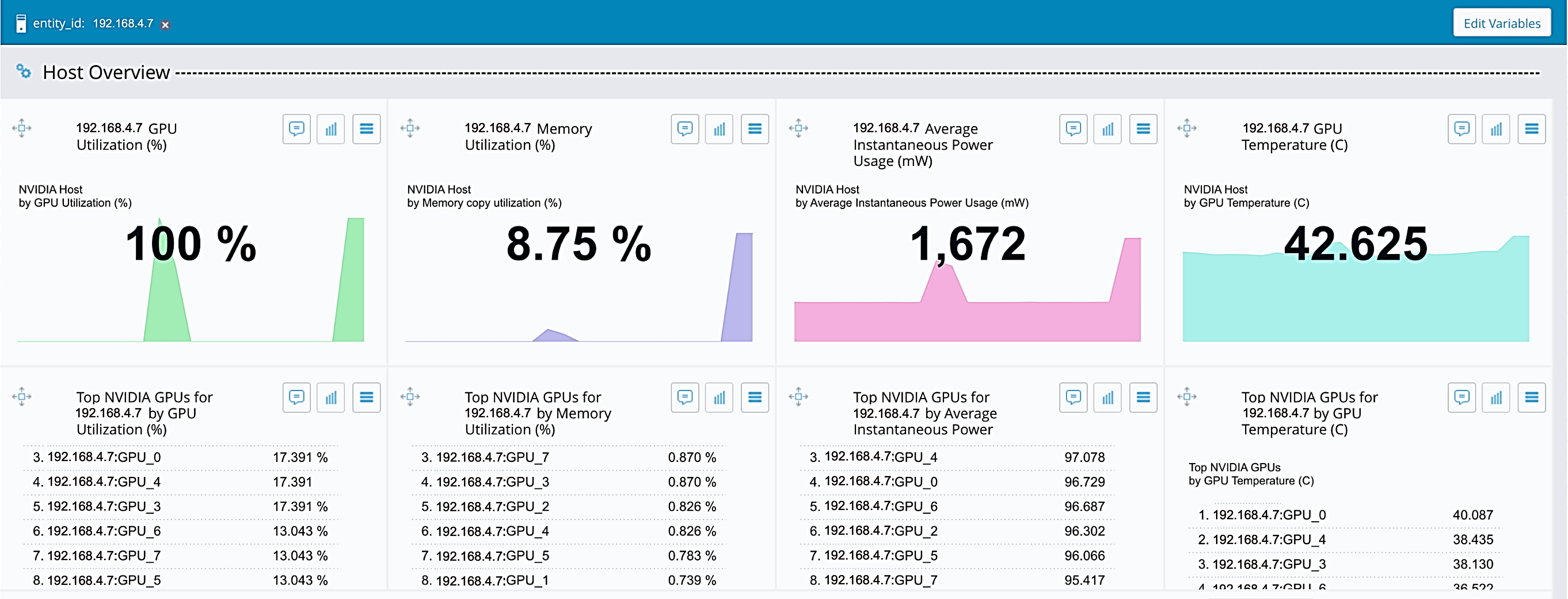

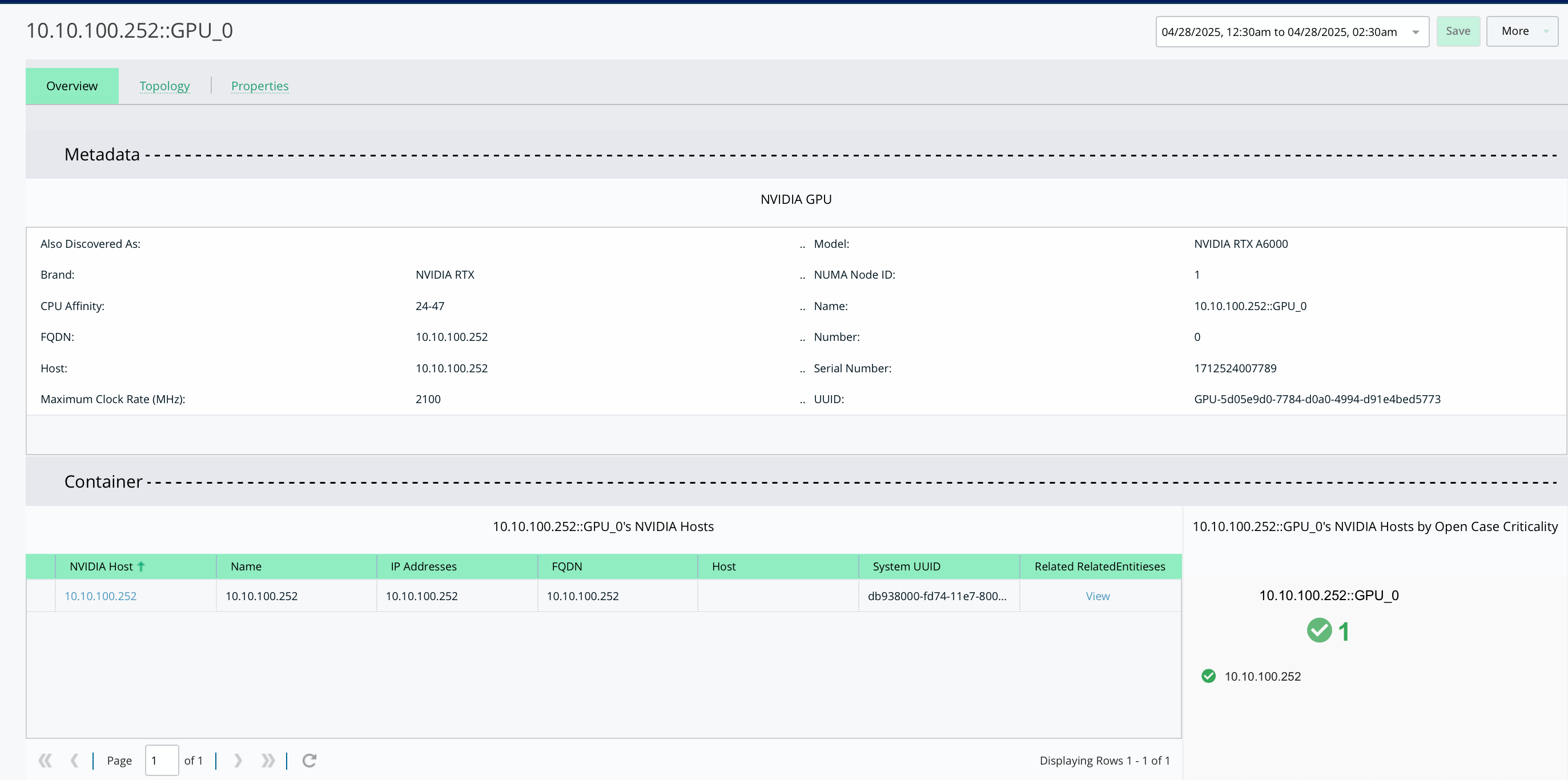

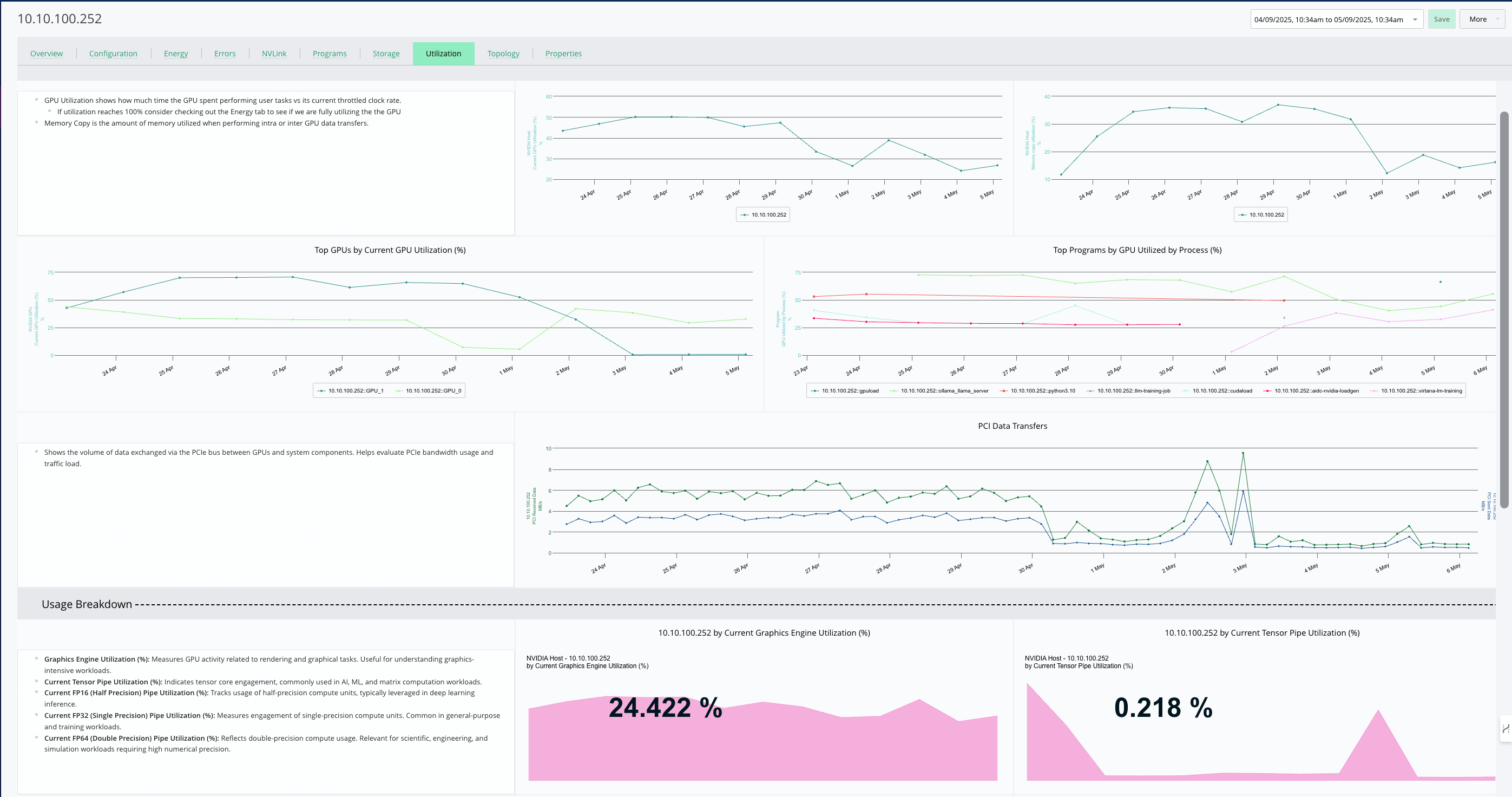

Monitor GPU Health and Performance at Scale

- Track utilization, thermal metrics, ECC errors, memory consumption, and tensor core activity.

- Gain visibility across heterogeneous environments, including NVIDIA GPUs.

- Identify resource contention before it impacts model performance.

Visualize Distributed AI Workloads and Bottlenecks

- Profile multi-node training jobs to uncover stragglers or sync delays.

- View end-to-end traces with OpenTelemetry to correlate app behavior and infra impact.

- Understand how infrastructure variations affect performance and cost.
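To make the straggler idea above concrete, here is a minimal Python sketch. It is not how AIFO works internally. The function name `find_stragglers`, the per-rank step timings, and the 1.2x-of-median threshold are all assumptions chosen for illustration.

```python
from statistics import median


def find_stragglers(step_times, tolerance=1.2):
    """Return ranks whose mean step time exceeds tolerance x the median rank mean.

    step_times: dict mapping a rank id to a list of per-step durations (seconds).
    tolerance:  hypothetical threshold; 1.2 means "20% slower than the median rank".
    """
    # Average step time per rank, skipping ranks with no samples.
    means = {rank: sum(t) / len(t) for rank, t in step_times.items() if t}
    if not means:
        return []
    baseline = median(means.values())
    return sorted(rank for rank, m in means.items() if m > tolerance * baseline)


# Hypothetical timings for a four-rank training job.
sample = {
    0: [1.00, 1.02, 0.98],
    1: [1.01, 0.99, 1.00],
    2: [1.50, 1.55, 1.45],  # consistently slower than its peers
    3: [1.03, 0.97, 1.00],
}
stragglers = find_stragglers(sample)
print(stragglers)
```

With these sample timings, rank 2 is the only one flagged. Comparing against the median rather than the mean keeps one very slow rank from shifting the baseline it is measured against.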

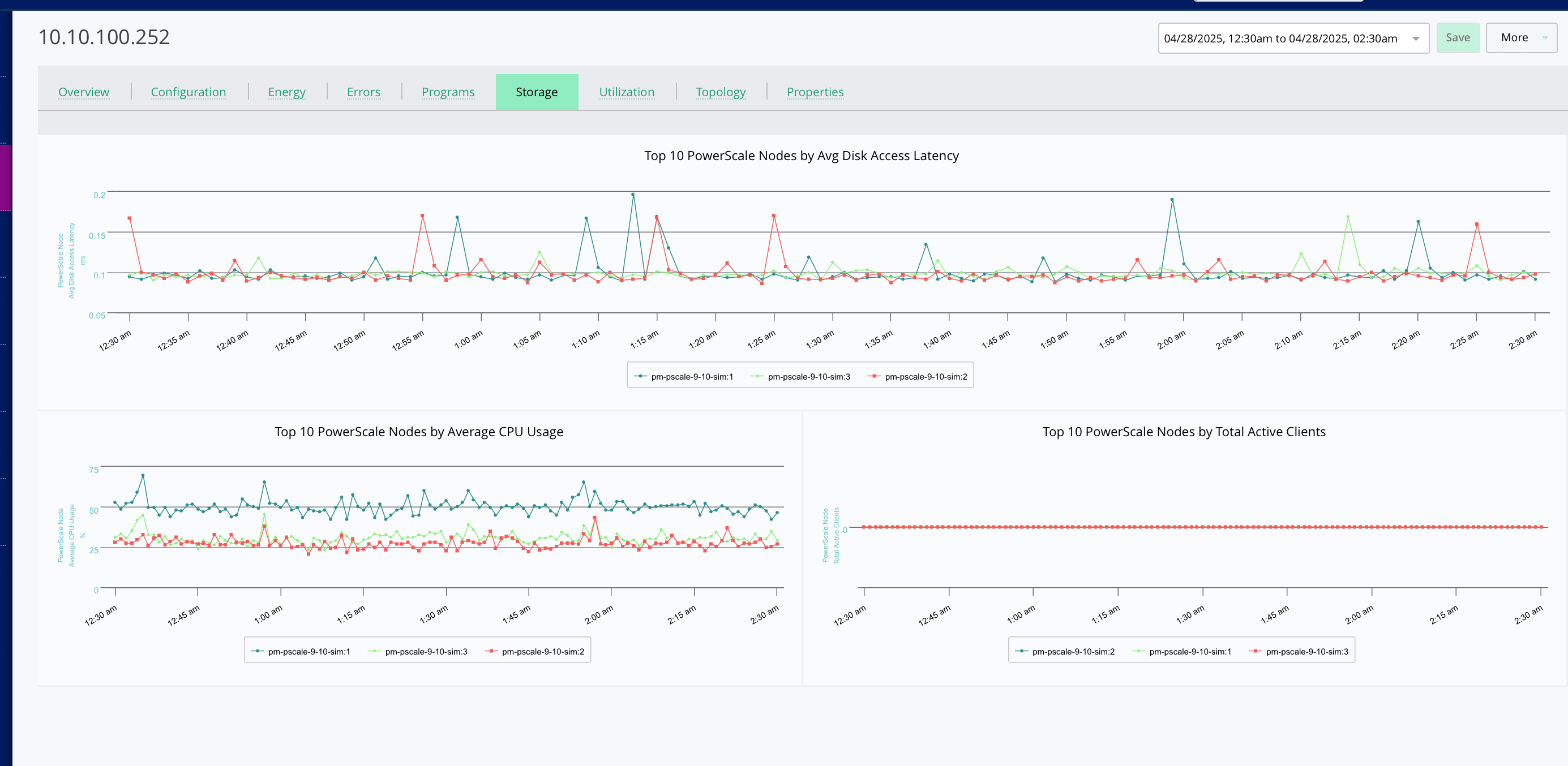

Identify Storage and Network Issues Impacting AI Pipelines

- Detect hidden IO latency, packet loss, or switch port congestion.

- Monitor bandwidth and throughput tied directly to model input/output performance.

- Prevent pipeline degradation that can slow down inference or retraining.

Improve Resource Efficiency and Cost Control

- Detect idle and overutilized GPUs, misconfigured environments, or underutilized hosts.

- Optimize infrastructure allocation to avoid overprovisioning.

- Reduce cloud and data center spend without compromising performance.
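As a rough illustration of separating idle from overutilized GPUs, the sketch below buckets each GPU by its average utilization. The function `classify_gpus`, the GPU names, and the 10% and 90% cutoffs are hypothetical values, not product defaults.

```python
def classify_gpus(utilization, idle_below=10.0, busy_above=90.0):
    """Bucket GPUs by average utilization.

    utilization: dict mapping a GPU id to a list of utilization samples (percent).
    idle_below / busy_above: assumed thresholds for illustration only.
    """
    result = {"idle": [], "overutilized": [], "normal": []}
    for gpu, samples in sorted(utilization.items()):
        if not samples:
            continue  # no telemetry for this GPU, so it cannot be classified
        avg = sum(samples) / len(samples)
        if avg < idle_below:
            result["idle"].append(gpu)
        elif avg > busy_above:
            result["overutilized"].append(gpu)
        else:
            result["normal"].append(gpu)
    return result


# Hypothetical utilization samples for three GPUs.
fleet = {
    "gpu-0": [2, 5, 3],
    "gpu-1": [95, 98, 97],
    "gpu-2": [60, 55, 70],
}
report = classify_gpus(fleet)
print(report)
```

In practice the thresholds and the averaging window would depend on the workload mix. A GPU that sits idle between scheduled batch jobs is a different case from one that is never assigned work.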

Plan with Confidence Using Real-Time Telemetry

- Analyze usage trends for GPUs, network, and storage to inform capacity planning.

- Make proactive decisions with data-driven recommendations.

- Prepare infrastructure for evolving AI demands across hybrid environments.
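One simple way to turn usage trends into a capacity estimate is a straight-line fit over recent samples. The sketch below assumes evenly spaced measurements and linear growth. The helper names `linear_trend` and `periods_until`, the weekly figures, and the 90% limit are illustrative only.

```python
def linear_trend(values):
    """Least-squares slope and intercept for evenly spaced samples (x = 0, 1, 2, ...)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples to fit a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def periods_until(values, limit):
    """Estimate how many periods after the last sample the trend reaches limit.

    Returns None when the trend is flat or decreasing.
    """
    slope, intercept = linear_trend(values)
    if slope <= 0:
        return None
    x_limit = (limit - intercept) / slope
    return max(0.0, x_limit - (len(values) - 1))


# Hypothetical weekly average GPU utilization (percent).
weekly_util = [50.0, 55.0, 60.0, 65.0]
weeks_left = periods_until(weekly_util, 90.0)
print(weeks_left)
```

For this sample series, utilization grows by 5 points per week, so the 90% limit is reached about 5 weeks after the last sample. Real demand is rarely linear, so an estimate like this works better as an early warning than as a forecast.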